Teaching artificial intelligence to understand and carry out tasks from verbal or written instructions alone has long been a challenge. Researchers at the University of Geneva (UNIGE) have now reported a breakthrough in a paper published in Nature Neuroscience on Monday. The paper details an AI model that not only performs such tasks well but can also describe them to another AI in purely linguistic terms, enabling the second AI to reproduce them.

Humans have a special talent for learning new things just by hearing or reading about them, and then explaining them to others. This ability sets us apart from animals, which usually need lots of practice and can’t pass on what they’ve learned.

In the world of computers, there’s a field called Natural Language Processing that tries to copy this human skill. It aims to make machines understand and respond to spoken or written words. This technology uses artificial neural networks, which are like simplified versions of the connections between neurons in our brains.

But even though progress has been made, we still don’t fully understand the complex brain processes involved. So while computers can understand language to some extent, they’re not yet as good as humans at grasping its intricacies.

Until now, getting AI to turn human language into action had proved especially difficult. The UNIGE team has now built an artificial neural network model that learned to perform simple tasks, such as locating things or reacting to what it sees, and then explained those tasks in words to another AI.

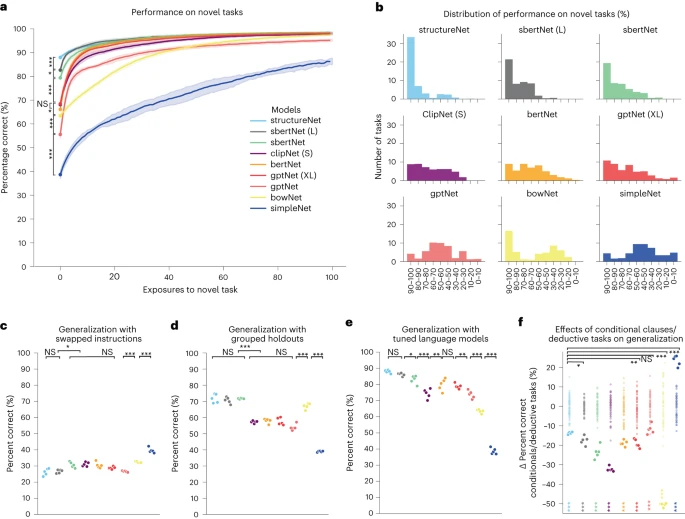

a,b, Illustrations of example trials as they might appear in a laboratory setting. The trial is instructed, then stimuli are presented at different angles and with different strengths of contrast. The agent must then respond with the proper angle during the response period. a, An example AntiDM trial, in which the agent must respond to the angle presented with the lowest intensity. b, An example COMP1 trial, in which the agent must respond to the first angle if it is presented with higher intensity than the second angle, and otherwise withhold its response. c, Diagram of model inputs and outputs. Sensory inputs (fixation unit, modality 1, modality 2) are shown in red and model outputs (fixation output, motor output) are shown in green. Models also receive either a rule vector (blue) or the embedding that results from passing the task instructions through a pretrained language model (gray). A list of the models tested is provided in the inset. Description/Image Credit: nature.com
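The two task rules in the caption are precise enough to write down directly. The sketch below is a hypothetical illustration in plain Python, not the study’s code: each stimulus is assumed to be an `(angle, intensity)` pair, `None` stands for withholding a response, and the function names `anti_dm` and `comp1` are invented for this example.

```python
from typing import Optional, Sequence, Tuple

# Assumption for illustration: a stimulus is (angle_in_radians, intensity).
Stimulus = Tuple[float, float]


def anti_dm(stimuli: Sequence[Stimulus]) -> float:
    """AntiDM rule as described in the caption: respond to the angle
    that was presented with the lowest intensity."""
    angle, _ = min(stimuli, key=lambda s: s[1])
    return angle


def comp1(first: Stimulus, second: Stimulus) -> Optional[float]:
    """COMP1 rule as described in the caption: respond to the first angle if it
    is more intense than the second; otherwise withhold the response (None)."""
    if first[1] > second[1]:
        return first[0]
    return None


if __name__ == "__main__":
    a, b = (0.5, 0.8), (2.0, 0.3)
    print(anti_dm([a, b]))  # 2.0: the weaker stimulus determines the response
    print(comp1(a, b))      # 0.5: the first stimulus is the stronger one
    print(comp1(b, a))      # None: the first stimulus is weaker, so no response
```

What the model has to learn is exactly this kind of mapping, but from the instruction text plus noisy sensory input rather than from hand-written rules.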

Dr. Alexandre Pouget, a professor at UNIGE’s Faculty of Medicine, said this was significant because, while AI can interpret and generate text or images, it cannot yet turn words into actions.

“Currently, conversational agents using AI are capable of integrating linguistic information to produce text or an image. But, as far as we know, they are not yet capable of translating a verbal or written instruction into a sensorimotor action, and even less explaining it to another artificial intelligence so that it can reproduce it,” Pouget said.

The model they built is a network of artificial neurons designed to mimic the brain regions responsible for language.

“We started with an existing model of artificial neurons, S-Bert, which has 300 million neurons and is pre-trained to understand language. We ‘connected’ it to another, simpler network of a few thousand neurons,” explains Reidar Riveland, a PhD student in the Department of Basic Neurosciences at the UNIGE Faculty of Medicine, and first author of the study.
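Riveland’s description amounts to a large pretrained language encoder whose output conditions a much smaller network, assumed here to be recurrent. The following is a minimal, hypothetical sketch of that wiring in plain Python, not the authors’ implementation: `fake_instruction_embedding` is an invented stand-in for S-Bert (it merely hashes words into a fixed-length vector), the layer sizes are tiny and arbitrary, and the weights are random rather than trained. It only shows how an instruction embedding can be fed, alongside the sensory inputs, into every time step of a small recurrent network.

```python
import math
import random
from typing import List

EMBED_DIM = 16   # hypothetical; a real sentence encoder produces much larger vectors
SENSORY_DIM = 5  # hypothetical: 1 fixation unit + 2 units per modality
HIDDEN_DIM = 8   # stands in for the "few thousand neurons" of the small network
OUTPUT_DIM = 3   # hypothetical: 1 fixation output + 2 motor output units


def fake_instruction_embedding(text: str) -> List[float]:
    """Hypothetical stand-in for a pretrained sentence encoder such as S-Bert:
    a deterministic, normalized bag-of-words vector, NOT a real language model."""
    vec = [0.0] * EMBED_DIM
    for word in text.lower().split():
        vec[sum(map(ord, word)) % EMBED_DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def random_matrix(rows: int, cols: int, rng: random.Random) -> List[List[float]]:
    return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]


def matvec(m: List[List[float]], v: List[float]) -> List[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def run_trial(instruction: str, sensory_steps: List[List[float]], seed: int = 0) -> List[List[float]]:
    """Run an untrained Elman-style RNN over one trial. The same instruction
    embedding is concatenated with the sensory input at every time step."""
    rng = random.Random(seed)
    w_in = random_matrix(HIDDEN_DIM, SENSORY_DIM + EMBED_DIM, rng)
    w_rec = random_matrix(HIDDEN_DIM, HIDDEN_DIM, rng)
    w_out = random_matrix(OUTPUT_DIM, HIDDEN_DIM, rng)
    embedding = fake_instruction_embedding(instruction)  # computed once, held fixed for the trial
    h = [0.0] * HIDDEN_DIM
    outputs = []
    for x in sensory_steps:
        drive = [a + b for a, b in zip(matvec(w_in, x + embedding), matvec(w_rec, h))]
        h = [math.tanh(d) for d in drive]
        outputs.append(matvec(w_out, h))  # one (fixation, motor, motor) readout per step
    return outputs


if __name__ == "__main__":
    steps = [[1.0, 0.5, 0.2, 0.0, 0.0]] * 4  # 4 time steps of made-up sensory input
    out = run_trial("respond in the direction of the dimmest stimulus", steps)
    print(len(out), len(out[0]))  # 4 3
```

The design point the sketch illustrates is the split in size: the language side is large and reused, while the part that produces actions is small and receives the sentence embedding as just one more input.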

In the experiment’s first phase, the researchers trained the network to simulate Wernicke’s area, the brain region responsible for language comprehension. In the second phase, they trained it to reproduce Broca’s area, which supports speech production.

Remarkably, all of this was done on conventional laptop computers. The AI received written instructions in English, for example to indicate a direction or to pick out the brighter of two visual stimuli.

Once the AI had mastered these tasks, it could describe them to another AI, effectively teaching it to perform them. According to the researchers, this is the first time two AIs have communicated purely through language.

“Once these tasks had been learned, the network was able to describe them to a second network, a copy of the first, so that it could reproduce them. To our knowledge, this is the first time that two AIs have been able to talk to each other in a purely linguistic way,” says Alexandre Pouget, who led the research.

According to Dr. Pouget, this breakthrough could have a major impact on robotics. Imagine robots that can understand instructions and talk to each other, which would make them especially useful in settings such as factories or hospitals.

Dr. Pouget believes this could lead to a future where machines work together with humans in ways we’ve never seen before, making things faster and more efficient.
