A Dense Introduction

Long Short-Term Memory (LSTM) is a neural network architecture for modeling temporal sequences. It is a type of recurrent neural network (RNN) designed to learn long-term dependencies from sequences of data such as text, audio, or time series. An LSTM network can remember information for long periods of time because each of its memory cells is regulated by gates. One of these, the “forget gate”, lets the network discard information it no longer needs.

LSTM networks are used for NLP tasks such as machine translation and text classification. In machine translation, the input is a sequence of words in one language, and the output is the translation of those words into another language. The LSTM network learns the mapping from the input sequence to the output sequence. Applied to time series, LSTM analyzes past observations to predict future trends. Time-series analysis is key wherever the order of observations matters, and many real-world phenomena produce time-series data, such as stock prices, energy consumption, and the weather.

LSTM networks are typically trained with the Back Propagation Through Time (BPTT) algorithm. The original LSTM formulation combined a truncated form of BPTT with the Real Time Recurrent Learning (RTRL) algorithm.

How Long can LSTM last?

LSTM can retain information across thousands of timesteps thanks to its long-term memory. A timestep is whatever interval the data uses: in a stock market chart, one timestep may be a 1-minute candle or a 1-day candle. The wall-clock length of a step, whether seconds or days, has little to do with how long an LSTM remembers; what matters is the number of steps. The memory cell carries information forward through its recurrent connections, which allows LSTM to use context from earlier in the sequence when making later predictions. The forget gate decides what information to keep in the cell state and what to discard. Meanwhile, the input gate decides what new information to add to the cell state.
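To make the gate mechanics concrete, here is a minimal sketch of a single LSTM step in plain Python, assuming a cell with one input and one hidden unit. The function name `lstm_step`, the weight layout, and the hand-picked weights are illustrative assumptions, not trained values and not any library's API.

```python
import math


def sigmoid(x):
    """Squash a value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """Run one step of a scalar LSTM cell (one input, one hidden unit).

    `w` maps each gate name to (input weight, recurrent weight, bias).
    """
    def pre(gate):
        wx, wh, b = w[gate]
        return wx * x + wh * h_prev + b

    f = sigmoid(pre("forget"))        # share of the old cell state to keep
    i = sigmoid(pre("input"))         # share of the new candidate to admit
    g = math.tanh(pre("candidate"))   # the new candidate information
    o = sigmoid(pre("output"))        # share of the cell state to expose

    c = f * c_prev + i * g            # updated cell state (long-term memory)
    h = o * math.tanh(c)              # updated hidden state (step output)
    return h, c


# Hypothetical weights chosen by hand for illustration only.
weights = {
    "forget": (0.0, 0.0, 8.0),      # large bias keeps the forget gate near 1
    "input": (1.0, 0.0, -8.0),      # input gate mostly closed
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 8.0),
}

h, c = 0.0, 1.0                      # start with a value stored in the cell
for x in [0.0] * 100:                # feed 100 empty timesteps
    h, c = lstm_step(x, h, c, weights)
print(c)                             # most of the stored value survives
```

With the forget gate held close to 1, the cell keeps most of its stored value over 100 steps; lowering the forget bias makes the stored value decay much faster.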

LSTM networks are used in a variety of fields, including machine translation, image captioning, and stock prediction. In this article, we focus on applications of LSTM networks in different fields, with an emphasis on time series data. The most widely used applications get the larger sections.

Stock Market Prediction

The most prominent use of Long Short-Term Memory is in the financial markets. Using LSTM networks to predict stock market movements is gaining huge popularity. There are two main ways to use LSTM for stock prediction:

a. To predict the direction of the stock market (up/down). The model analyzes historical data, using the last few time steps as input to predict the next time step.

b. To predict the stock price after a given time. Related gated recurrent networks, such as the Gated Recurrent Unit (GRU), also play a role here.
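As a rough illustration of approach (a), the sketch below pairs each window of recent closing prices with an up/down label for the next step. The function name `direction_samples`, the `lookback` parameter, and the prices are made up for this example; a real pipeline would feed such pairs to the network.

```python
def direction_samples(closes, lookback=3):
    """Pair each window of `lookback` closes with an up/down label.

    The label is 1 when the next close is higher than the last close
    in the window, and 0 otherwise.
    """
    samples = []
    for end in range(lookback, len(closes)):
        window = closes[end - lookback:end]
        label = 1 if closes[end] > closes[end - 1] else 0
        samples.append((window, label))
    return samples


closes = [10.0, 10.5, 10.2, 10.8, 11.0, 10.7]  # made-up closing prices
samples = direction_samples(closes)
for window, label in samples:
    print(window, "up" if label else "down")
```

For approach (b), the same windows would be paired with the next price itself instead of a binary label.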

Recurrent networks such as LSTM have been applied widely to stock market prediction. Popular frameworks for building them include TensorFlow, Keras, and MXNet. Market prediction accuracy of over 90% is still not possible with LSTM; however, with enough training data, LSTM can be fairly accurate. Any good stock prediction platform will combine a variety of techniques, including statistical analysis, to make predictions.

1. LSTM Stock Prediction with GitHub

GitHub is one of the most popular code-sharing platforms for developers and data scientists. A ton of resources are available on GitHub for stock prediction using LSTM. Because repositories there are updated frequently, it is easy to find recent code and build on it, which is helpful given that stock market conditions are ever-changing.

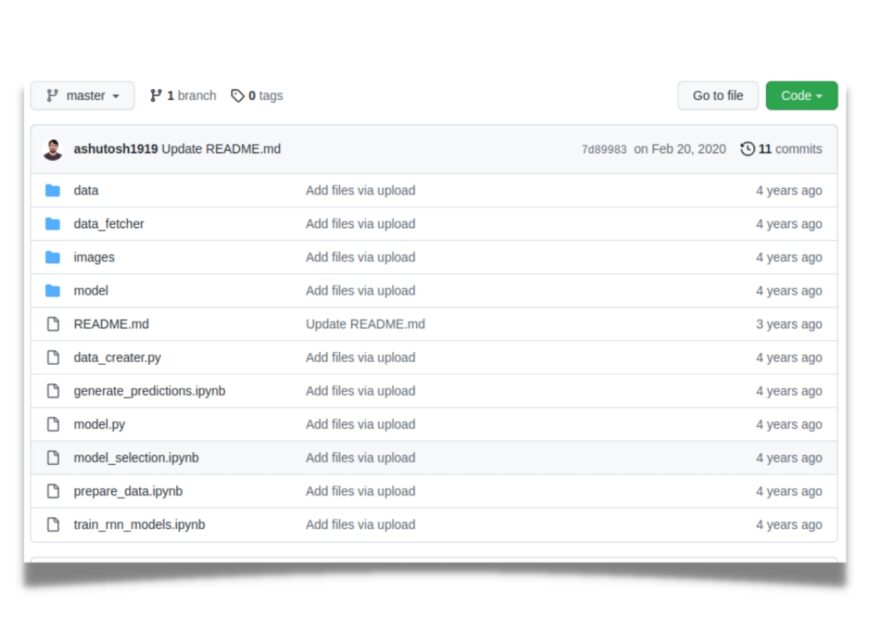

GitHub/ashutosh1919

The author, ashutosh1919, did not use pre-built models but instead trained “LSTM NN models” for 32 companies. The data covers 1 Nov 2016 to 31 Oct 2018. The code is developed step by step, with each step adding more functionality.

- Develop the Python file downloader.py, which fetches stock data from the Yahoo Download API.

- Write the data_creater.py file, which contains classes and functions to download data, normalize and process it, select features, build simple sequences, etc. The downloaded data is stored in the data directory, with each company’s symbol having its own directory.

- Visualize the data using the prepare_data.ipynb notebook, observing the volatility distribution and range.

- After that, develop the model.py file. It contains the classes and functions to build the model, select the best model, select the hyperparameters, predict the output, and show the graph for the output.

- Then train a network built from several layers, namely a bidirectional LSTM layer, a fully connected layer, and a dropout layer.
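The normalization step in the list above could look something like the following sketch. The repository's exact method is not shown in this article, so min-max scaling is an assumption here, as are the function names and the sample prices. The scaling range is fitted on training data only, so that no information from the test period leaks into training.

```python
def min_max_fit(values):
    """Return the (min, max) pair used to scale a series."""
    return min(values), max(values)


def min_max_scale(values, lo, hi):
    """Scale values into [0, 1] relative to a fitted (lo, hi) range."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]  # constant series: nothing to scale
    return [(v - lo) / span for v in values]


def min_max_invert(scaled, lo, hi):
    """Map scaled values back to the original price range."""
    return [s * (hi - lo) + lo for s in scaled]


train_prices = [100.0, 110.0, 120.0]   # made-up training prices
test_prices = [130.0]                  # made-up later price

lo, hi = min_max_fit(train_prices)     # fit on the training data only
train_scaled = min_max_scale(train_prices, lo, hi)
test_scaled = min_max_scale(test_prices, lo, hi)
print(train_scaled, test_scaled)
```

Values outside the training range, such as the test price here, scale to numbers above 1, which is expected with this approach.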

They report an error rate of less than 1% using LSTM networks.

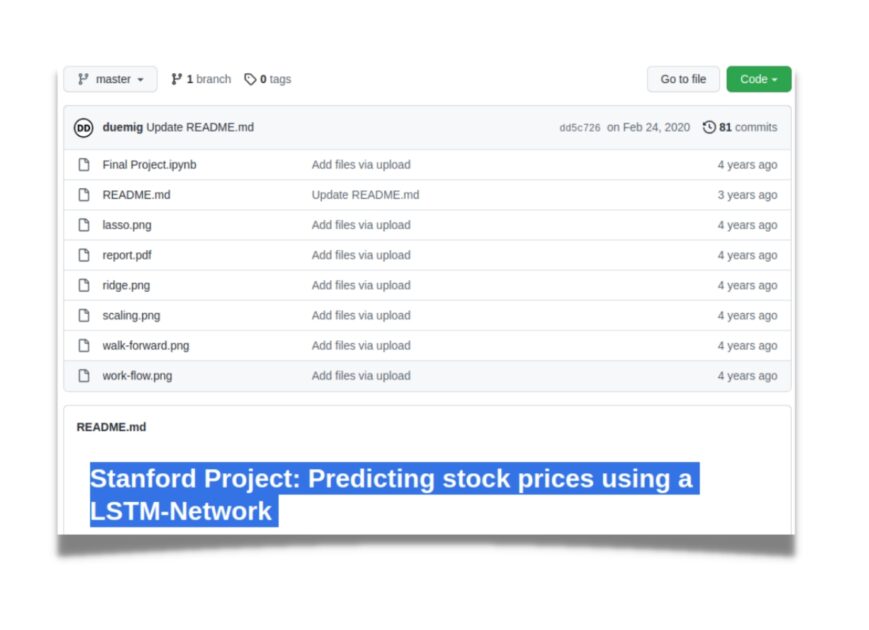

Stanford Project

The Stanford study found that the LSTM network outperformed logistic regression in terms of accuracy: the authors state that the LSTM network predicted the movements of the stock market more effectively. The study takes time-series price-volume data into account, which can help improve the accuracy of the predictions.


2. LSTM Stock Prediction with Kaggle

Using TensorFlow, a typical Kaggle notebook proceeds as follows. First, import the data from the CSV file. Then, split the data into training and testing sets. After that, reshape the data so that the input X is the value at time t and the target Y is the value at time t+1. Finally, build the model and fit it. Once trained, the model predicts a run of consecutive values starting from a real one, and the results are plotted. The date, symbol, open, close, low, high, and volume columns are all used in the process.
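The split and the X=t, Y=t+1 reshaping can be sketched in plain Python as follows. The helper names `chronological_split` and `make_xy`, the `look_back` parameter, and the sample series are assumptions for illustration; the actual notebook may organize these steps differently.

```python
def chronological_split(series, train_fraction=0.8):
    """Split a series into train/test parts without shuffling."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


def make_xy(series, look_back=1):
    """Build (X, Y) pairs where X holds `look_back` values up to time t
    and Y is the value at time t+1."""
    xs, ys = [], []
    for t in range(len(series) - look_back):
        xs.append(series[t:t + look_back])
        ys.append(series[t + look_back])
    return xs, ys


series = [float(v) for v in range(1, 11)]   # made-up closing prices 1..10
train, test = chronological_split(series)
xs, ys = make_xy(train, look_back=1)
print(xs[0], ys[0])
```

The split is chronological rather than random because shuffling a time series would let the model train on observations that come after the ones it is tested on.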

3. Investment Bots and Signals that Use LSTM

Platforms like Seeking Alpha, TrendSpider, and Benzinga Pro have been using LSTM for stock prediction. Long-term dependencies are really important for stock prediction, because so many factors affect stock prices: the political situation, the economic situation, and market sentiment are just a few. LSTM is really good at learning long-term dependencies.

Also, LSTM can be very useful for sequence prediction. The importance of sequence prediction in the stock market is immense. For example, a candlestick chart is a sequence of data points. When you are trying to predict the stock’s future direction, you’re essentially trying to predict the next sequential data point.

Seeking Alpha uses LSTM networks for stock prediction. Proponents argue that the process is more consistent than human judgment and will likely yield better results. However, one prominent argument is that feature engineering requires high-level acumen and is therefore ultimately the make-or-break factor for predicting stock prices accurately.

Sentiment Analysis

Another use of LSTM networks is sentiment analysis. Sentiment analysis classifies the polarity of a given text, that is, whether the text is positive, negative, or neutral. To do that, developers train a model on a labeled dataset and then use it to predict the sentiment of new, unseen text.

Good at Handling Imbalanced Datasets

LSTM networks can also cope with imbalanced datasets. Imbalance is common in sentiment analysis, where one class often has many more examples than the other, for instance more negative examples than positive ones. With appropriate training, LSTM networks can learn to identify the rarer positive examples, even in an imbalanced dataset.

Remembering the context

The sentiment of a text often depends on the context; individual words play only a small role. The role of LSTM, and of its gates, is to provide context to individual words. With that context, the sentiment of the text becomes much clearer.

A Form of Memory

LSTM networks have a “memory” that can retain information for long periods of time. This is what makes them well suited to sentiment analysis: they can remember the context of the text, which in turn helps them make accurate predictions.

Example of Using LSTM in sentiment analysis

We cannot cover every step here, as the article would become far too long. However, we will show the first and last steps in a nutshell.

First Step: Data Visualization

# read data from text files
from string import punctuation  # characters stripped from the reviews below

with open('data/reviews.txt', 'r') as f:
    reviews = f.read()
with open('data/labels.txt', 'r') as f:
    labels = f.read()

# get rid of punctuation
reviews = reviews.lower() # lowercase, standardize
all_text = ''.join([c for c in reviews if c not in punctuation])

# split by new lines and spaces
reviews_split = all_text.split('\n')
all_text = ' '.join(reviews_split)

# create a list of words
words = all_text.split()

# print stats about data
print('Number of reviews: ', len(reviews_split))
print('Number of unique words: ', len(set(words)))

# print first review and its label
print(reviews_split[0])
print(labels.split('\n')[0])

Second Step: Convert to lowercase

# convert to lower case
reviews = reviews.lower()

Third Step: Remove punctuation

# remove punctuation
from string import punctuation
reviews = reviews.lower() # lowercase, standardize
all_text = ''.join([c for c in reviews if c not in punctuation])

Fourth Step: Create a list of reviews

# split by new lines and spaces
reviews_split = all_text.split('\n')
all_text = ' '.join(reviews_split)

# create a list of words
words = all_text.split()

The further steps include tokenizing, analyzing the reviews’ lengths, padding and truncating, splitting the data into training, validation, and test sets, creating data loaders and batching, defining the LSTM network architecture, defining the model class, and training the network.
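Of the further steps, tokenizing and padding/truncating are easy to sketch without any deep learning library. The version below is one plausible approach, not the exact code used for the results that follow: the helper names `build_vocab`, `encode`, and `pad_features`, the left-padding choice, and the two tiny reviews are all assumptions for illustration.

```python
from collections import Counter


def build_vocab(words):
    """Map each word to an integer; index 0 is reserved for padding.

    More frequent words get smaller indices (ties broken alphabetically).
    """
    counts = Counter(words)
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: idx for idx, word in enumerate(ordered, start=1)}


def encode(review, vocab):
    """Turn a review into a list of integer tokens, skipping unknown words."""
    return [vocab[w] for w in review.split() if w in vocab]


def pad_features(encoded_reviews, seq_length):
    """Left-pad short reviews with 0 and truncate long ones to seq_length."""
    features = []
    for row in encoded_reviews:
        row = row[:seq_length]
        features.append([0] * (seq_length - len(row)) + row)
    return features


sample_reviews = ["good film", "bad bad film plot"]   # made-up reviews
vocab = build_vocab(" ".join(sample_reviews).split())
encoded = [encode(r, vocab) for r in sample_reviews]
features = pad_features(encoded, seq_length=3)
print(features)
```

Every row of `features` has the same length, which is what allows reviews to be stacked into batches for training.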

After all the steps are complete, the results will look something like this:

Results

test_review = 'The simplest pleasures in life are the best, and this film is one of them. Combining a rather basic storyline of love and adventure this movie transcends the usual weekend fair with wit and unmitigated charm.'

# call function (predict and net come from the training steps not shown here;
# this assumes predict returns a truthy value for a positive review)
seq_length=200 # good to use the length that was trained on
result = predict(net, test_review, sequence_length=seq_length)

# print custom response based on whether test_review is pos/neg
print('positive') if result else print('negative')

positive

# test negative text
test_review_2 = 'This movie was horrible. The acting was terrible and the film was not interesting. I would not recommend this movie.'

# call function
seq_length=200 # good to use the length that was trained on
result = predict(net, test_review_2, sequence_length=seq_length)

# print custom response based on whether test_review is pos/neg
print('positive') if result else print('negative')

negative

# test neutral text
test_review_3 = 'This movie was ok. Not amazing, but not horrible. I would not recommend this movie.'

# call function
seq_length=200 # good to use the length that was trained on
result = predict(net, test_review_3, sequence_length=seq_length)

# print custom response based on whether test_review is pos/neg
print('positive') if result else print('negative')

negative

# test positive text
test_review_4 = 'This movie was great. The acting was amazing and the film was interesting. I would recommend this movie.'

# call function
seq_length=200 # good to use the length that was trained on
result = predict(net, test_review_4, sequence_length=seq_length)

# print custom response based on whether test_review is pos/neg
print('positive') if result else print('negative')

positive

As you can see, the model labeled the neutral review as negative too. That is because it is a binary classifier: it was trained to treat anything that is not a positive review as negative.
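One hedged way to picture this behavior: if the model outputs a probability that a review is positive, a binary rule has nowhere to put a lukewarm review. The sketch below contrasts that rule with a hypothetical neutral band around 0.5. The function names, the 0.5 cutoff, and the `band` width are assumptions for illustration, and a genuinely three-class model would need neutral examples in its training data.

```python
def label_binary(prob_positive):
    """Binary rule: anything below the cutoff counts as negative."""
    return "positive" if prob_positive >= 0.5 else "negative"


def label_with_neutral(prob_positive, band=0.15):
    """Post-hoc rule: scores close to 0.5 are reported as neutral."""
    if prob_positive >= 0.5 + band:
        return "positive"
    if prob_positive <= 0.5 - band:
        return "negative"
    return "neutral"


# Made-up model scores for three reviews.
for score in (0.9, 0.45, 0.1):
    print(score, label_binary(score), label_with_neutral(score))
```

A score of 0.45 falls on the negative side of the binary rule even though it sits close to the middle, which mirrors what happened to the neutral review.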

Language Translation

Translating languages is difficult for machines. Language is a complex, nuanced tool that humans use to communicate an incredible amount of information. LSTM has proven useful in language translation tasks many times; one example is the seq2seq LSTM from Kaggle/harshjain123. Google Translate’s neural machine translation (NMT) system has likewise used LSTM layers to read and interpret text.

Geometric Deep Learning

Geometric deep learning is a branch of machine learning that deals with geometrically structured data. Such data include images and 3D shapes. Geometric deep learning algorithms learn to represent data in a high-dimensional space and are useful for object detection.

For example, an LSTM network can take a 3D shape as input, say the shape of a vase. The network must learn to represent the 3D shape of the vase in a high-dimensional space and then use that learned representation to classify the shape into one of a number of categories, such as “flower vase”, “table vase”, or “floor vase”.

The term “geometric” refers to the fact that the network uses the geometry of the data, in other words its shape, to learn a representation of the data.

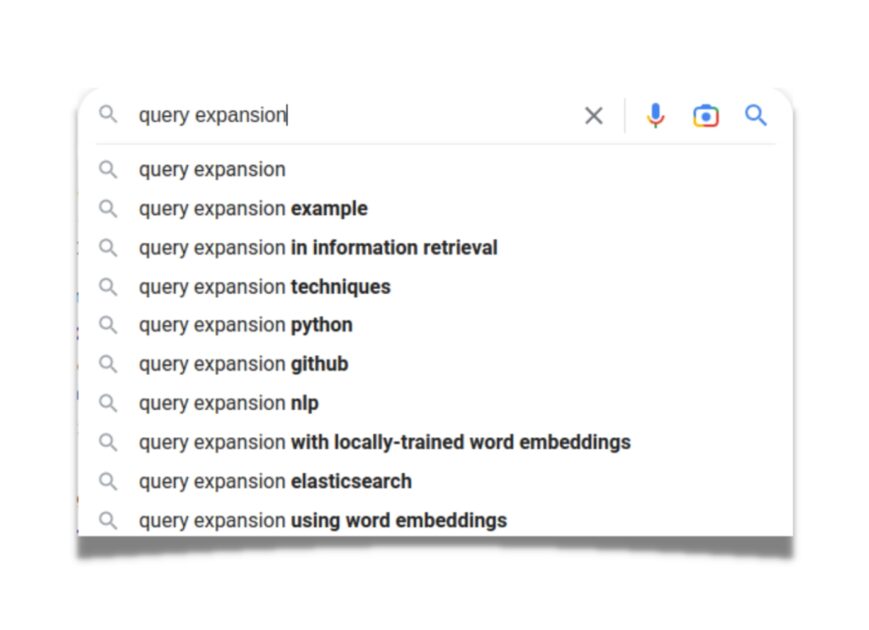

Query Expansion

Query expansion is a sub-field of information retrieval. The main idea is to add new terms to a user’s query to improve retrieval performance. The challenge is to decide automatically which terms to add, and with what weights. LSTM can assign weights to the new terms, which helps improve retrieval performance.

There are two types of query expansion: 1) term-based expansion and 2) query-based expansion. In term-based expansion, LSTM generates new terms that are relevant to the user’s query. In query-based expansion, it generates a new relevant query. Google’s search engine uses query-based expansion.
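To illustrate what weighted term-based expansion produces, the sketch below replaces the LSTM with a hand-written lookup table of candidate terms and scores. The function `expand_query`, the `candidates` table, and its scores are all hypothetical stand-ins; in a real system a trained model would supply the candidates and their weights.

```python
def expand_query(query, candidates, max_new_terms=2, original_weight=1.0):
    """Return a term -> weight mapping for the query plus its best new terms.

    `candidates` maps a query term to a list of (new_term, score) pairs.
    """
    weights = {term: original_weight for term in query.lower().split()}
    scored = {}
    for term in list(weights):
        for new_term, score in candidates.get(term, []):
            if new_term not in weights:  # do not re-add original terms
                scored[new_term] = max(score, scored.get(new_term, 0.0))
    # Keep the highest-scoring new terms (ties broken alphabetically).
    best = sorted(scored.items(), key=lambda kv: (-kv[1], kv[0]))
    weights.update(best[:max_new_terms])
    return weights


# Hypothetical candidate terms, standing in for a trained model's output.
candidates = {
    "lstm": [("rnn", 0.8), ("gru", 0.6)],
    "tutorial": [("guide", 0.7), ("lstm", 0.9)],
}
expanded = expand_query("LSTM tutorial", candidates)
print(expanded)
```

The original terms keep full weight while the added terms carry lower weights, so a retrieval system can favor documents that match the user's own words.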

LSTM can do all this thanks to its feedback mechanism. As it reads the query, it stores information in its hidden state vector. In simpler terms, it keeps track of what it has read so far and of the most likely outcome.

One interesting thing about LSTM is that it can remember information for long periods of time. So, even if a query is long, it can still generate new terms that are relevant to the user’s query.

Sequence Prediction

Sequence prediction is a difficult task, but LSTM networks have shown great promise in this area. In fact, it’s their very job. With the ability to store information about previous inputs and handle variable-length input sequences, LSTM networks are well suited to the task.

There are many applications for LSTM-based sequence prediction. For example, LSTM networks can predict the next frame in a video. This is especially useful when the video shows something like a person walking, where the appearance of the person changes very slowly from frame to frame, so the network can learn to predict the next frame from the past few frames. LSTM networks can also predict the upcoming action in a video game or the next chess move.

Web Traffic

LSTM networks can predict the next time step in a sequence of web traffic data. Say you have a sequence of web traffic data representing the number of web visitors over time. You can use an LSTM network to predict the number of visitors at the next time step.

An LSTM network can also predict the web search volume for a given query. Given a sequence of web search data, it predicts the search volume for the next time step, and it can do so quite efficiently. Sites like Semrush and WordStream use LSTM to do that.

Conclusion

Long Short-Term Memory is a great tool to have in the machine learning toolbox. It is best used in specific circumstances where its strengths can be leveraged to the fullest: not like a hammer for every problem, but like a chisel on a block of marble. The network has proven itself many times, and practitioners in fields from stock market prediction to natural language processing have much to gain from this powerful tool.
