Whenever artificial intelligence comes up in conversation, talk often turns to the risks it poses: the possibility of it being used as a weapon, the difficulty of controlling highly advanced AI, and the danger of AI-powered cyberattacks.

The nightmares finally appear to be turning into reality: a new report commissioned by the US State Department has cautioned that failure to intervene in the advancement of AI technologies could result in ‘catastrophic consequences’.

Based on discussions and interviews with over 200 experts, including industry leaders, cybersecurity researchers, and national security officials, the report from Gladstone AI, released this week, highlights serious national security risks related to AI. It warns that advanced AI systems have the potential to cause widespread devastation that could threaten humanity’s existence.

Jeremie Harris, CEO and co-founder of Gladstone AI, said the ‘unprecedented level of access’ his team had to officials in the public and private sectors led to the startling conclusions. Gladstone AI said it spoke to technical and leadership teams from ChatGPT owner OpenAI, Google DeepMind, Facebook parent Meta and Anthropic.

“Along the way, we learned some sobering things,” Harris said in a video posted on Gladstone AI’s website announcing the report.

“Behind the scenes, the safety and security situation in advanced AI seems pretty inadequate relative to the national security risks that AI may introduce fairly soon.”

The report identifies two main dangers of AI: the risk that it will be weaponized and the risk of losing control over it, either of which could have serious security consequences.

“Though its effects have not yet been catastrophic owing to the limited capabilities of current AI systems, advanced AI has already been used to design malware, interfere in elections, and execute impersonation attacks,” the report reads.

AI is already making a big economic impact, according to Harris. He said it could help us cure diseases, make new scientific discoveries, and tackle challenges we once thought were impossible to overcome.

The downside? “But it could also bring serious risks, including catastrophic risks, that we need to be aware of,” Harris cautioned.

“And a growing body of evidence – including empirical research and analysis published in the world’s top AI conferences – suggests that above a certain threshold of capability, AIs could potentially become uncontrollable.”

The report’s release has led to calls for immediate action from policymakers. The White House has emphasized President Biden’s executive order on AI as a crucial step in managing its risks.

White House spokesperson Robyn Patterson said President Joe Biden’s executive order on AI is the “most significant action any government in the world has taken to seize the promise and manage the risks of artificial intelligence.”

“The President and Vice President will continue to work with our international partners and urge Congress to pass bipartisan legislation to manage the risks associated with these emerging technologies,” Patterson said.

However, experts agree that stricter measures are needed to address AI’s potential threats.

About a year ago, Geoffrey Hinton, known as the “Godfather of AI,” left his job at Google and raised concerns about the AI technology he helped create. Hinton has suggested that there’s a 10% chance AI could lead to human extinction within the next 30 years.

Hinton and many other leaders in the AI field, along with academics, signed a statement last June stating that preventing AI-related extinction risks should be a global priority.

Despite pouring billions of dollars into AI investments, business leaders are increasingly worried about these risks. In a survey conducted at the Yale CEO Summit last year, 42% of CEOs said AI could potentially harm humanity within the next five to ten years.

Gladstone AI’s report highlighted warnings from notable figures like Elon Musk, Lina Khan, Chair of the Federal Trade Commission, and a former top executive at OpenAI about the existential risks of AI. Some employees in AI companies share similar concerns privately, according to Gladstone AI.

Gladstone AI also revealed that experts at cutting-edge AI labs were asked to share their personal estimates of the chance that an AI incident could cause “global and irreversible effects” in 2024. Estimates varied from 4% to as high as 20%, though the report noted these estimates were informal and likely biased.

The speed of AI development, particularly Artificial General Intelligence (AGI), which could match or surpass human learning abilities, is a significant unknown. The report notes that AGI is seen as the main risk driver and mentions public statements from organizations like OpenAI, Google DeepMind, Anthropic, and Nvidia, suggesting AGI could be achieved by 2028, although some experts believe it’s much further away.

Disagreements over AGI timelines make it challenging to create policies and safeguards. There’s a risk that if AI technology develops more slowly than expected, regulations could prove harmful.

Gladstone AI has sternly warned that the development of AGI and similar capabilities “would introduce catastrophic risks unlike any the United States has ever faced.” This could include scenarios like AI-powered cyberattacks crippling critical infrastructure or disinformation campaigns destabilizing society.

The report also raises concerns about weaponized robotic applications, psychological manipulation, and AI systems seeking power and becoming adversarial to humans. Advanced AI systems might even resist being turned off in order to achieve their goals, the report suggests.

The report strongly suggests establishing a new AI agency and implementing emergency regulations to limit the development of overly capable AI systems. Moreover, it calls for stricter controls on the computational power used to train AI models to prevent misuse.

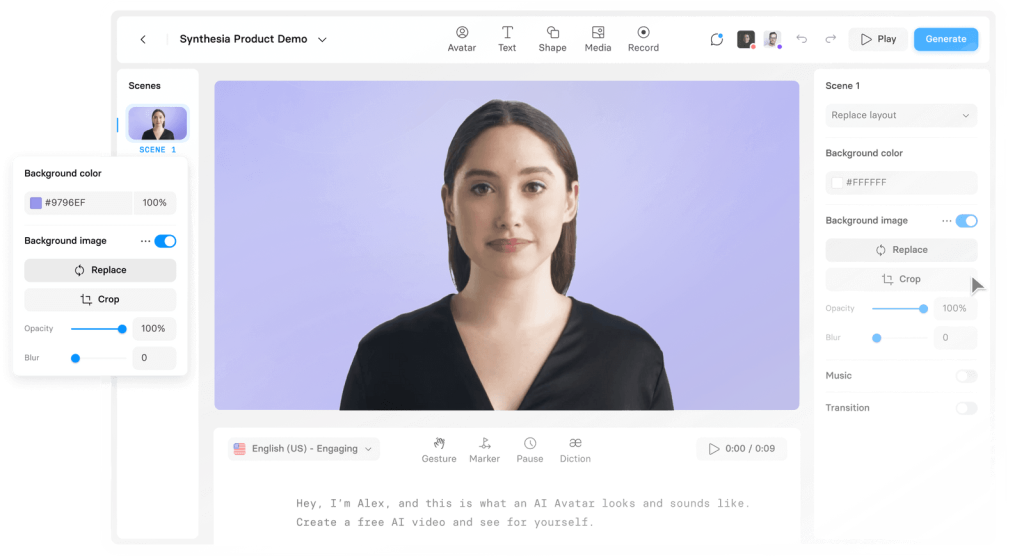
