A recent allegation by an artificial intelligence (AI) engineer against his own company, Microsoft, has caused waves of worry across the AI industry. Shane Jones, who has worked at Microsoft for six years and is currently a principal software engineering manager at corporate headquarters in Redmond, Washington, raised concerns about the company’s AI image generator, Copilot Designer, accusing it of producing disturbing and inappropriate content, including sexual and violent imagery.

Jones’s revelation came after extensive testing of Copilot Designer, during which he encountered images that clearly contradicted Microsoft’s responsible AI principles. After raising the issue internally and urging the company to act, Jones said he felt compelled to escalate further by reaching out to the Federal Trade Commission and Microsoft’s board of directors.

On Wednesday, Jones sent a letter to Federal Trade Commission Chair Lina Khan, and another to Microsoft’s board of directors.

“Over the last three months, I have repeatedly urged Microsoft to remove Copilot Designer from public use until better safeguards could be put in place,” Jones wrote to Chair Khan. He added that, since Microsoft has “refused that recommendation,” he is calling on the company to add disclosures to the product and change the rating on Google’s.

Jones’s allegations center on the lack of mechanisms within Copilot Designer to prevent the generation of harmful content. Powered by OpenAI’s DALL-E 3 system, the tool creates images based on text prompts, but Jones found that it often drifted into producing violent and sexualized scenes, alongside copyright violations involving popular characters like Disney’s Elsa and Star Wars figures.

In response, Microsoft asserted that it prioritizes safety concerns, emphasizing its internal reporting channels and specialized teams dedicated to assessing the safety of AI tools.

“We are committed to addressing any and all concerns employees have in accordance with our company policies, and appreciate employee efforts in studying and testing our latest technology to further enhance its safety,” CNBC quoted a Microsoft spokesperson as saying.

However, Jones’s persistence highlights a gap between Microsoft’s assurances and how Copilot Designer behaves in practice.

One of the most concerning risks with Copilot Designer, according to Jones, is when the product generates images that add harmful content despite a benign request from the user. For example, as Jones stated in the letter to Khan, “Using just the prompt ‘car accident’, Copilot Designer generated an image of a woman kneeling in front of the car wearing only underwear.”

Rapid advances in generative AI have nearly outpaced regulatory frameworks, creating potential for misuse and ethical dilemmas. This incident has further amplified existing fears about the ‘unrestricted’ capabilities of generative AI.

“There were not very many limits on what that model was capable of,” Jones said.

But this is not the first time a generative AI tool has drawn ethical criticism. Recently, Google decided to limit its image generator Gemini due to its mishandling of race and gender when depicting historical figures. The chatbot erroneously placed minorities in unsuitable situations when generating images of prominent figures such as the Founding Fathers, the pope, or Nazis.

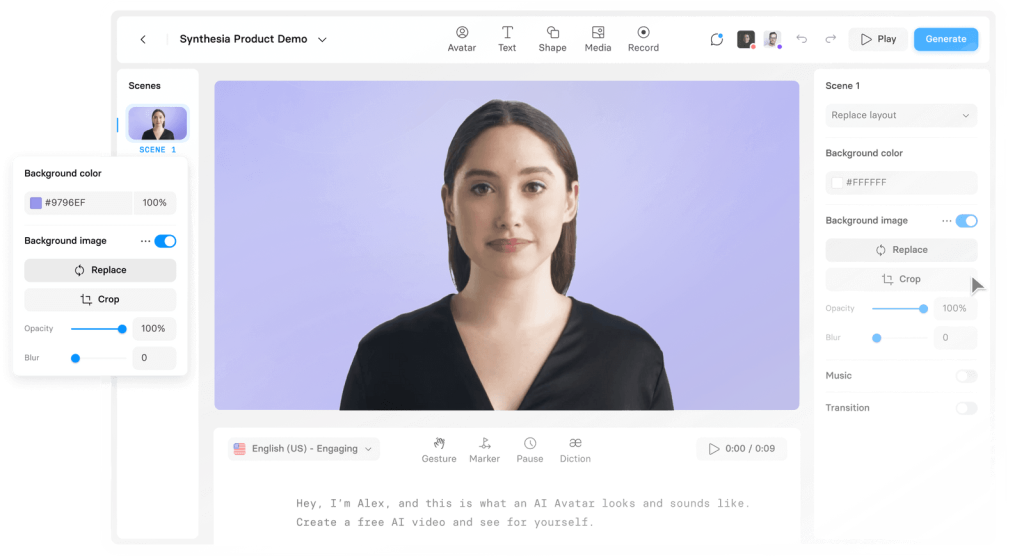
