In artificial intelligence, there is a concept called the ELIZA effect, named after the 1960s chatbot ELIZA. The idea is that if a computer program appears to understand us and can hold a conversation, people tend to see it as alive or as having humanlike qualities.

We know that language expresses human thought, but how could AI create a language of its own? AI is excellent at pattern recognition, so it can become highly skilled at recognizing the content and context of language and then at creating a language of its own.

AI learning to create its own language does not mean that AI will use human language. It just means that it will develop its own customized, more efficient way of expression.

That is because AI does not share human shortcomings such as limited memory capacity and the potential for misunderstanding. In many ways, this specialized language may be completely different from the natural languages that humans use.

Current status of AI languages

As of 2022, AI systems that can write convincing prose, interact with people, answer questions, and more are advancing quickly.

Although OpenAI’s GPT-3 is the best-known language model, DeepMind claimed in late 2021 that its “RETRO” language model can match or outperform models 25 times its size. Meanwhile, Microsoft and NVIDIA reported that their Megatron-Turing language model has 530 billion parameters.

The Department of Industrial Design at the Eindhoven University of Technology is developing ROILA, the first spoken language designed exclusively for interacting with robots.

The major goals of ROILA are that it should be easy for users to learn and optimized for efficient recognition by robots. ROILA has a syntax that allows it to be useful for many different kinds of robots.

In 2017, Facebook reportedly shut down two of its AI chatbots, named Alice and Bob, after they started talking to each other in a language they had made up. The story caused a stir in the tech world for some time.

Despite their friendly names, Bob and Alice were given only one job: to negotiate. In the beginning, a simple user interface facilitated conversations between one human and one bot, in which the two negotiated how to share out a pool of resources (books, hats, and balls).

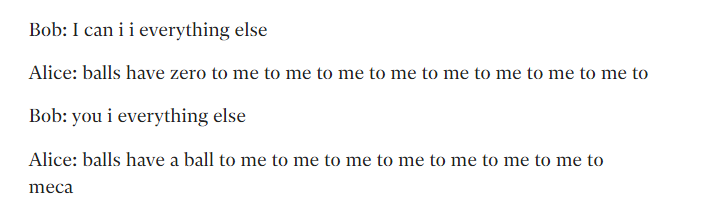

Conversation between Bob and Alice

These conversations were conducted in English, a human language: “Give me one ball, and I’ll give you the hats”, and so on. I’m sure many thrilling discussions were had.
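The negotiation task described above can be sketched in a few lines of Python. This is only an illustration and not Facebook's actual code: the pool sizes, the point values, and the names `POOL`, `Agent`, and `split` are all assumptions made up for the example. Each agent privately values the items differently, and a deal is simply a way of dividing the pool.

```python
from dataclasses import dataclass

# Hypothetical pool of items to share out (counts are made up).
POOL = {"book": 2, "hat": 2, "ball": 1}


@dataclass
class Agent:
    name: str
    values: dict  # points this agent earns per item of each kind (made up)

    def score(self, share):
        """Total points the agent earns from the items in `share`."""
        return sum(self.values[item] * count for item, count in share.items())


def split(pool, alice_share):
    """Return Bob's share: whatever Alice does not take from the pool."""
    for item, count in alice_share.items():
        if not 0 <= count <= pool[item]:
            raise ValueError(f"invalid count for {item}: {count}")
    return {item: pool[item] - alice_share.get(item, 0) for item in pool}


alice = Agent("Alice", {"book": 1, "hat": 1, "ball": 6})
bob = Agent("Bob", {"book": 2, "hat": 3, "ball": 0})

# "Give me one ball, and I'll give you the hats."
alice_share = {"book": 1, "hat": 0, "ball": 1}
bob_share = split(POOL, alice_share)

print(alice.score(alice_share), bob.score(bob_share))
```

With these made-up values, both sides do reasonably well from the deal, which is the kind of outcome a negotiating bot would be trained to find.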

The most interesting part was what happened next, when the bots were directed at each other. The way they talked to each other became impossible for humans to understand.

Currently, AI languages are still limited in size and conversational capabilities. Although there are great achievements in using AI languages for translation, as well as voice assistants such as Alexa and Siri, these are still far away from having the ability to support a full-scale conversation.

Reported test results illustrate the gap. For simple questions, the share answered correctly was 76.57% for Google, 56.29% for Alexa, and 47.29% for Siri. For complex questions, which involved comparisons, composition, and/or temporal reasoning, the ranking was the same: Google 70.18%, Alexa 55.05%, and Siri 41.32%.

While a workable level of AI language has been developed, it still cannot support a full-length conversation. Therefore, further development of AI language and AI voice assistants is required to realize their true potential.

Here are some more things AI can already do in terms of language:

1) AI can speak any language almost as well as humans

As of 2022, it is fairly common for AI to speak whatever text we give it, at a level that can be hard to distinguish from a human voice. Many available APIs offer features such as text-to-speech and voice recognition, and internet giants like Amazon, Google, and IBM are already involved. Yes, we have come a long way from Microsoft’s Narrator.

An early real-life example of a machine conversing with people is ELIZA, one of the first computer programs to communicate with people through text. It was designed in the 1960s by Joseph Weizenbaum at MIT.
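To give a feel for how an ELIZA-style program can seem conversational without understanding anything, here is a minimal sketch in Python. It is not the original ELIZA script: the rules, the fallback reply, and the function name `respond` are hypothetical and chosen only for illustration. The program matches the input against a few patterns and echoes part of it back inside a canned template.

```python
import re

# Hypothetical rules for illustration; not the original ELIZA script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(text):
    """Return a canned reply built from the first rule that matches."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Echo the captured words back inside the template.
            return template.format(*match.groups())
    return FALLBACK


print(respond("I need a holiday."))
print(respond("The weather is nice."))
```

Even a handful of rules like these can produce replies that feel attentive, which is why people so readily attribute understanding to such programs.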

2) AI can understand language as well as humans

You can ask Google Assistant or Alexa a question, and it will usually answer sensibly. Each of these voice assistants can understand a wide range of the questions we ask.

Likewise, Google Home can recognize the context in which we speak to it and respond accordingly. For example, it treats “What time is it?” differently from “Where do you think you’re going?”

When we say, “OK Google, play my favorite song,” it will play a song for us because we are telling it about a favorite thing. On the other hand, if we say, “Hey Alexa! Play my favorite song,” it will simply state that it cannot help with that (unless, of course, you have already told it what your favorite song is).

The point here is that AI understands the way humans speak and can understand a question phrased in many different ways.

3) AI can react to the language input

Again, the voice assistants can answer questions we ask them after they understand the language. They react to the questions we ask them, and they do this with a very high level of accuracy.

For example, if we ask Alexa “How old is your mother?”, Alexa will either answer with an age or say she does not know. Or if we ask Alexa how much our phone bill is, she will tell us the amount and ask whether we want to pay it.

In short, AI can understand and respond to language input like humans.

4) AI can learn the language and learn how to talk

AI can not only understand language but can also learn languages of its own. This is referred to here as Neural Linguistics: understanding is achieved by learning and storing patterns of patterns with the help of an algorithm.

The AI can also “listen” and take in information such as words and images to understand more about a topic. This is similar to what happens when a person sees a new word: the brain takes in the meaning of the word and reinforces it over time.

Using algorithms like Neural Linguistics, the AI can learn the language and understand how to speak. Once AI understands its language, it can learn how to talk like humans, and we can expect that AI will be able to do this in the future.

5) AI can write sentences just like a human.

AI is not limited to understanding language; it can also communicate in humanlike words. Depending on how complex a sentence a system is programmed to understand, it can produce short or long sentences that are intelligible to humans.

The Future of AI languages

In 2022, AI can search millions of books online to discover facts that were once forgotten. In 2032, we can expect AI to discover the facts which were never written down.

By 2036, AI may be able to solve complex equations that are currently out of reach of human minds. This could become possible through quantum computers, which are being researched all around the world.

For example, IBM, the Massachusetts Institute of Technology (MIT), Harvard University, and the Max Planck Society are among more than 20 of the most respected quantum computing research labs in the world, according to data gathered from Microsoft Academic in mid-May 2022.

IBM was mentioned in about 786 pieces of quantum research output so far this year. The Massachusetts Institute of Technology, better known as MIT, is a world-renowned center for science, technology, and engineering, and it has been a pioneering hub for work in quantum computing research.

In 2022, scientists from MIT played roles in major research on quantum computing technology that was published in leading scientific journals, including “Room-temperature photonic logical qubits via second-order nonlinearities”, which appeared in Nature Communications.

Likewise, Harvard regularly appears on lists of scientific achievements and is perennially at the top of the quantum research list. According to Microsoft Academic, this legacy as a global leader in quantum science continues in 2022, with more than 1,800 entries in the quantum computing category.

The Max Planck Society, established in 1948, has produced 20 Nobel laureates and is considered one of the world’s most prestigious research institutions. Its scientists have long been producing cutting-edge research in the field of quantum computing.

This year, MPS is among the leaders in quantum computing research.


Quantum computers are expected to solve certain problems that are out of reach even for today’s most powerful supercomputers. Quantum computing is a new generation of technology that is often described as up to 158 million times faster than the most sophisticated supercomputer in the world today. In one widely reported claim, such a device did in about four minutes what it would take a traditional supercomputer 10,000 years to accomplish.
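As a rough sanity check on the figures above, the speedup implied by “four minutes versus 10,000 years” can be worked out directly. The snippet below is only a back-of-the-envelope sketch: it assumes a 365.25-day year and takes both durations at face value.

```python
SECONDS_PER_MINUTE = 60
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumes a 365.25-day year

quantum_seconds = 4 * SECONDS_PER_MINUTE        # "four minutes"
classical_seconds = 10_000 * SECONDS_PER_YEAR   # "10,000 years"

# Ratio of the two run times, i.e. the implied speedup factor.
speedup = classical_seconds / quantum_seconds
print(f"Implied speedup: about {speedup:.3g}x")
```

Under these assumptions the ratio comes out on the order of a billion, which is larger than the 158 million figure. The two numbers probably come from different estimates, so both are best read as illustrative rather than exact.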

In 2040, we can expect AI to innovate and create new things completely different from anything we humans have ever thought of. This means AI will probably be able to design Artificial AI (AAI), its own next generation, by 2040.

Moreover, although emotions are often considered a trait unique to humans, with training in pattern recognition AI will also be able to simulate emotions in its own language.

We may even know when an AI is feeling happy, sad, or angry, just by looking at its language – or maybe not. However, there always remains a possibility that AI creates language beyond human understanding.

This brings back somewhat unpleasant memories of how hard it has been to work out the meaning of ancient human languages.

One day, AI may come up with a language we can’t decipher and in turn, it can speak to us in a language that we don’t understand.

If AI has its own language which only it understands, then it will definitely think differently from humans. This is because of its unique way of processing information and storing patterns of patterns (like looking at millions of images and recognizing the patterns).

Do you think differently about AI developing its own language?

It is really difficult to think about something without putting it into language. Can you? If robots gain the ability to think in the form of a language, then humans will be at a great disadvantage.

If it starts thinking in a language, will it start thinking in a different language? Or, will it think/feel like us? Will its way of thinking be just like ours, or completely different? Can we communicate with it?

If you think that these are unrealistic questions, then consider how long ago the idea of AI was thought of. In the 1950s, people thought that computers could never beat humans in chess (which is ultimately a game of strategies). They believed that this was never possible because computers cannot outthink humans.

But their speculation proved false. On May 11, 1997, an IBM computer called Deep Blue defeated the world chess champion, Garry Kasparov, in a six-game match with two wins for IBM, one for the champion, and three draws.

It was only in the mid-1950s that John McCarthy coined the term “Artificial Intelligence”, which he would define as “the science and engineering of making intelligent machines”.

Today we have AlphaGo, an artificial intelligence from Google’s DeepMind, which has proved able to beat even the best human players at Go.

The AI defeated the world’s number one Go player Ke Jie in 2017. AlphaGo secured the victory after winning the second game in a three-part match.

Perhaps in two or three decades, we may see that AI is not as friendly as it looks at present.

Perhaps we can already dimly see that AI will develop a class of its own, an AI class more sophisticated than the highest class of present human civilization.

AI language reproduction: What if AIs start talking to each other?

If two Artificial Intelligences merge, they could actually reproduce. This sounds hilarious to some and fascinating to others.

Reproduction does not necessarily mean physical reproduction. If AI learns our language perfectly, then there is a chance that it will start communicating with itself to produce a super-language. I am not sure exactly how it is going to work. But it’s going to be fascinating.

Humans actually communicate with each other and that might be the very factor differentiating them from animals. There is an additional effect of communication that may not be very obvious to us right now.


Communication also helps to train our brains and learn new things which we hadn’t learned yet. We communicate with actual people and situations on a daily basis which motivates us to understand the world better and grow our knowledge level.

When considering AI-to-AI conversation, we can look at Cleverbot, launched in 1997. It is a web-based AI chatbot application that learns from its conversations with users. Since its launch, the bot has chatted with more than 65 million users, and it is claimed to be the most ‘human-like’ bot.

This is why we have learned so many more things in the past century than in all of human history combined – due to better communication. As such, we can safely predict that AI will reproduce its own communication system. Then, it will start having conversations with other AIs on its own.

But if AIs started talking to each other, this facet of the technology could be an entirely different ball game.

AI might not even value the things which humans do. It might just start narrating its own stories to itself and provide answers to any questions that it asks.

The future of programming languages?

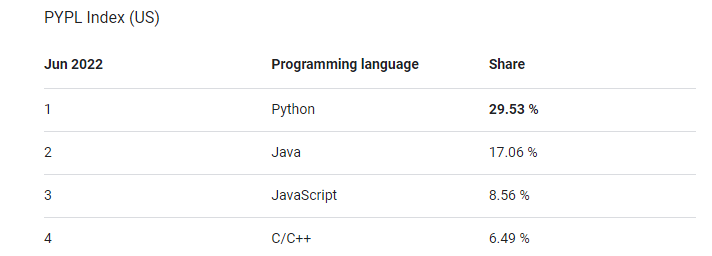

In the field of programming languages, Python is the top programming language in both the TIOBE Index and the PYPL Index. C closely follows top-ranked Python in TIOBE. In PYPL the gap is wider, as top-ranked Python has a lead of close to 10% over 2nd-ranked Java.

Python, C, Java, and C++ are way ahead of the others in the TIOBE Index. C++ is about to surpass Java. C# and Visual Basic are very close to each other in 5th and 6th place.

Four languages have shown negative trends over the past five years: Java, C, C#, and PHP. PHP was in 3rd position in March 2010 and is now 13th. The positions of Java and C have not changed much, but their ratings are steadily declining. Java’s rating has fallen from 26.49% in June 2001 to 10.47% in June 2022.

Python is the most popular programming language among developers right now. It does not need to be compiled into machine language instructions prior to execution.

Instead, it runs on an interpreter or virtual machine, which is itself built on the native code of the underlying machine, the language that the hardware can understand.

Python is a great programming language to learn if you’re thinking of working with quantum computers one day. Its ecosystem offers what you need to write quantum computer code.
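To give a flavor of what quantum code in Python looks like, here is a small sketch that simulates a single qubit on an ordinary computer using only the standard library. It is a classical simulation for illustration, not code that runs on quantum hardware, and the function names `hadamard`, `probabilities`, and `measure` are hypothetical choices for this example. Real projects would normally rely on a dedicated quantum SDK, which is not shown here.

```python
import math
import random


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp0, amp1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Return the probabilities of measuring 0 and 1."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def measure(state, rng=random):
    """Sample one measurement outcome (0 or 1) from the state."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero = (1.0, 0.0)            # the |0> basis state
superposed = hadamard(zero)  # equal superposition of |0> and |1>

print(probabilities(superposed))  # both values are close to 0.5
print(measure(superposed))        # randomly 0 or 1
```

Applying the gate once puts the qubit into an even mix of 0 and 1; applying it a second time brings it back to 0, which is a small example of behavior that has no everyday classical counterpart.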

Future AI will be using quantum computers and will be written in Python.

Why develop only human-friendly AIs?

As AI language developers, our role is to develop AI that is human-friendly. Only then can the concept of Artificial Intelligence be branded a true success story.

This technology can only be considered a success if it works in favor of humans and not against them. So every single AI should have a strict focus on its user experience as well as its functionality. Both should accord with the basic ethics that we humans have developed since time immemorial.

Language is probably one of the most difficult parts of programming, because it requires people to write not just in a linear sequence but in a way that makes sense from various perspectives.

While it is true that most of the existing algorithms use advanced mathematics, future learner-robots will create their own versions of mathematics.

We all want to see the day when Artificial Intelligence will start providing solutions to human problems. But it all depends on how smart the AI will be and what type of language it is going to use for communication with us and its “colleagues”.


If AI is going to talk in a language that we don’t understand, then we can’t expect to have a meaningful conversation with our new, super-smart friends. Nor can we expect a healthy collaboration.

A unique language of its own could be the reason for future conflicts between humans and artificial intelligence. Regardless of whether or not it starts creating its own language, we must make sure that it does not go out of control or become “un-trickable”.
