Household robots are getting better at doing lots of different jobs around the house, like cleaning up messes and serving food. They learn by copying what people do, but sometimes they struggle when things don’t go exactly as planned.

At MIT, researchers are working on a new approach to help robots cope with these unexpected situations. They’ve found a way to connect a robot’s movement data with the common-sense knowledge of a large language model (LLM), an AI program trained on vast amounts of text. This helps robots break tasks down into smaller steps and recover from problems without a human having to step in.

Yanwei Wang, a student at MIT, says, “We’re teaching robots to fix mistakes on their own, which is a big deal for making them better at their jobs.”

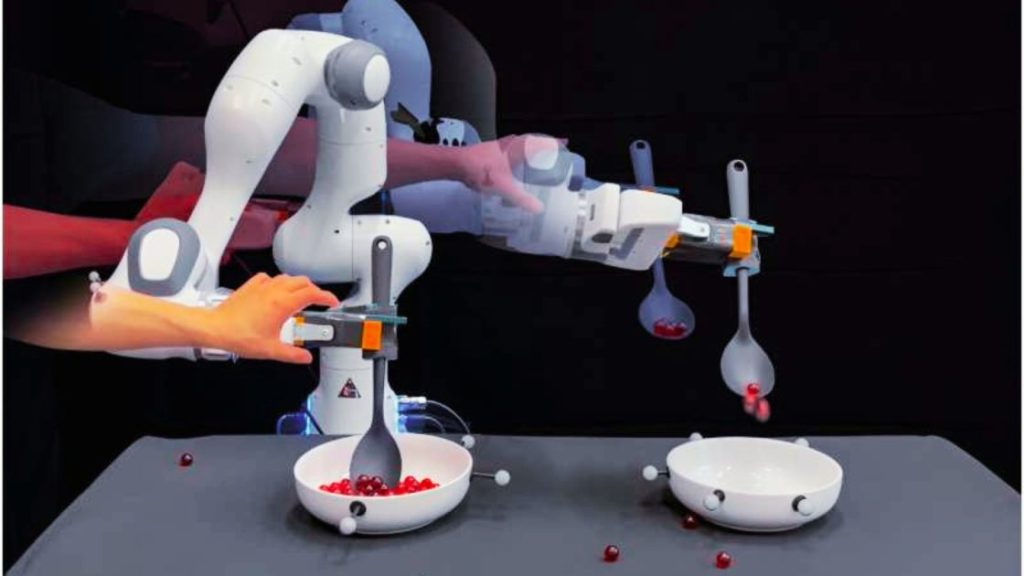

In a study to be presented at the International Conference on Learning Representations (ICLR), the MIT team demonstrates the approach on a simple task: scooping marbles from one bowl into another. The team uses the LLM to break the task into a sequence of steps. It then teaches the robot to recognize which step it is in and to adjust when something goes wrong.

Instead of stopping or starting over, the robot can now carry on when something doesn’t go as planned. This could eventually let household robots handle complex chores reliably, even when things get messy.
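The minimal Python sketch below makes the idea concrete. It uses a fixed list of steps, of the kind an LLM might suggest for the marble task, and checks before every action which step the robot is actually in. Everything in it is a hypothetical simplification. The step names, the `State` fields and the hand-written `classify_step` function stand in for what the MIT team learns from demonstration data, and none of it is their actual algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical step list, standing in for a plan an LLM might suggest.
PLAN = ["reach", "scoop", "transport", "pour"]


@dataclass
class State:
    """Toy description of the robot's situation (hypothetical fields)."""
    spoon_at_source: bool = False
    spoon_has_marbles: bool = False
    spoon_at_target: bool = False
    done: bool = False


def classify_step(s: State) -> str:
    """Hand-written stand-in for a learned classifier: map the current
    state to the plan step that should run next, or "done"."""
    if s.done:
        return "done"
    if s.spoon_has_marbles:
        return "pour" if s.spoon_at_target else "transport"
    return "scoop" if s.spoon_at_source else "reach"


def execute(step: str, s: State) -> None:
    """Apply the idealized effect of one step to the state."""
    if step == "reach":
        s.spoon_at_source, s.spoon_at_target = True, False
    elif step == "scoop":
        s.spoon_has_marbles = True
    elif step == "transport":
        s.spoon_at_source, s.spoon_at_target = False, True
    elif step == "pour":
        s.spoon_has_marbles = False
        s.done = True


def run(s: State, perturb_at: Optional[int] = None, max_actions: int = 20) -> List[str]:
    """Re-check the current step before every action, so a disturbance
    sends the robot back to the step that fits instead of continuing blindly."""
    trace: List[str] = []
    for t in range(max_actions):
        step = classify_step(s)
        if step == "done":
            break
        if step not in PLAN:
            raise ValueError(f"unknown step: {step}")
        trace.append(step)
        execute(step, s)
        if t == perturb_at:
            # Simulated disturbance: the marbles get knocked off the spoon.
            s.spoon_has_marbles = False
    return trace


print(run(State()))
# → ['reach', 'scoop', 'transport', 'pour']
print(run(State(), perturb_at=2))
# → ['reach', 'scoop', 'transport', 'reach', 'scoop', 'transport', 'pour']
```

Without disturbances, the robot goes through the four steps once. Suppose the marbles are knocked off during transport (`perturb_at=2`). The check then sees an empty spoon away from the source bowl, so the robot goes back to reach and scoop rather than blindly pouring an empty spoon.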

Wang adds, “Our idea can make robots learn to do tricky tasks without needing humans to step in every time something goes wrong. It’s a big step forward for household robots.”

What if they get as smart as us?

The idea of household robots becoming as smart as humans could be a game-changer. Common sense, the ability to handle everyday situations wisely, is a big part of how humans think. If robots had it too, the impact would be far-reaching.

Imagine robots fitting right into our daily lives, understanding what’s going on around them, and making decisions as we do. They’d anticipate our needs, adapt to new situations, and act in ways that keep us safe and happy. This wouldn’t just make things run more smoothly; it would also make us trust robots more.

On the other hand, some big questions remain. As robots become more like us, they start to feel less like tools and more like companions, don’t they? That means we’ll expect them to act ethically, just as we do. So we’ll need to make sure they’re programmed to always put people first.

Are common-sense-capable robots a threat?

Not in the near future, though the potential risks are real. According to Wang, robots that can fix their own mistakes without humans needing to step in are just around the corner. He is excited about the progress in training robots with teleoperation data, recorded while a person remotely guides the robot through a task. The team’s algorithm turns this data into robust behaviors, which could let robots complete complex jobs even when things get tricky.

There’s also employment to consider. If robots take over many tasks, some people might lose their jobs. We’ll need to rethink how we work and learn new skills to adapt to a world where robots are part of daily life.

In addition, the tragic incident at a vegetable packaging plant in Goseong, South Korea, on November 8, 2023, showed how risky it can be to use industrial robots at work.

The robot involved was not an advanced system with anything like common sense. Even so, it fatally injured a worker who was inspecting it. The incident is a reminder of the potential dangers of such technologies. It also underscores the importance of enforcing strict safety rules when using AI or robots in the workplace.

References:

https://news.mit.edu/topic/machine-learning

https://techxplore.com/news/2024-03-household-robots-common.html

https://www.sciencedaily.com/releases/2024/03/240325172439.htm

https://openreview.net/forum?id=qoHeuRAcSl

https://iclr.cc/

https://www.messecongress.at/lage/?lang=en
