Artificial intelligence (AI) and machine learning (ML) have become ubiquitous worldwide, but their true capabilities are still only partly understood. One of the compelling areas still under exploration is the autonomous practice and refinement of skills by robots. Traditionally, this process required human oversight, but researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and The AI Institute have recently developed a transformative solution. Their “Estimate, Extrapolate, and Situate” (EES) algorithm, introduced at the Robotics: Science and Systems Conference, allows robots to self-optimize, potentially increasing their effectiveness in factories, households, and hospitals. This breakthrough raises both exciting possibilities and profound questions. Chief among them: what are the consequences if robots learn too much?

The EES algorithm represents a major advancement in robotic learning. Traditionally, robots needed extensive human programming to operate effectively in specific environments. With EES, robots can practice and improve their skills independently. In an unfamiliar warehouse, for example, a robot using EES can learn to pick items from a shelf and improve its performance with each attempt. This autonomous learning ability could revolutionize industries by reducing the need for constant human supervision.

However, the implications of such technology extend far beyond efficiency gains. The ability of robots to learn independently in real time brings us closer to a future where machines might operate with a level of autonomy that challenges our current understanding of control and safety.

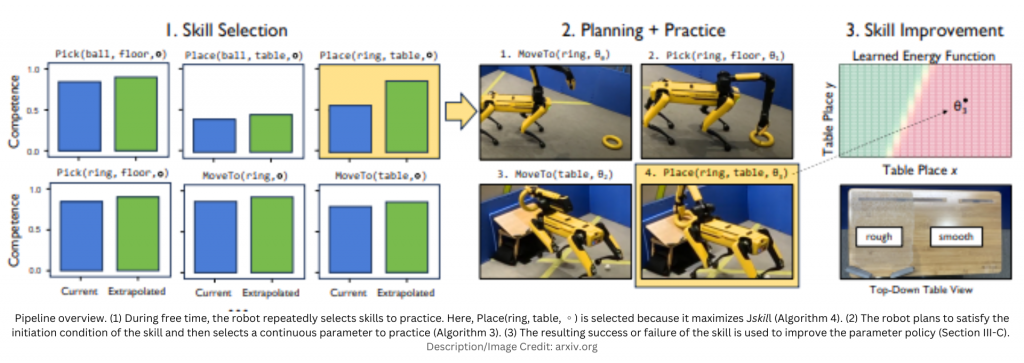

The importance of EES lies in its ability to optimize the learning process. Robots equipped with this algorithm can focus on the specific skills that need improvement and refine them through practice. During trials at The AI Institute, Boston Dynamics’ Spot robot demonstrated the efficacy of this approach. The robot learned to place a ball on a slanted table and sweep toys into a bin in just a few hours, remarkably faster than previous methods. This kind of improvement in autonomous learning is not just a technological achievement; it’s a glimpse into a future where robots could continually evolve, adapting to new tasks with minimal human input.
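To make the idea of "focusing on the skills that need improvement" more concrete, here is a minimal Python sketch. It is not the published EES implementation. The `SkillRecord` fields, the smoothed success-rate estimate, the fixed `learning_rate`, and the `task_weight` value are all assumptions chosen for illustration. The sketch estimates how good the robot currently is at each skill, guesses how much one more practice session would help, and picks the skill with the largest expected payoff.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SkillRecord:
    """Practice history for one skill (all fields are hypothetical)."""
    name: str
    successes: int
    attempts: int
    task_weight: float  # assumed importance of the skill to the overall task


def estimate(record: SkillRecord) -> float:
    """Estimate current competence as a smoothed success rate."""
    # Laplace smoothing keeps the estimate defined when attempts == 0.
    return (record.successes + 1) / (record.attempts + 2)


def extrapolate(competence: float, learning_rate: float = 0.2) -> float:
    """Guess competence after one more practice session (assumed model)."""
    # Assumption: practice closes a fixed fraction of the gap to perfect success.
    return competence + learning_rate * (1.0 - competence)


def pick_skill_to_practice(records: Sequence[SkillRecord]) -> str:
    """Choose the skill whose practice is expected to help the task most."""
    def expected_gain(record: SkillRecord) -> float:
        now = estimate(record)
        return record.task_weight * (extrapolate(now) - now)

    return max(records, key=expected_gain).name


skills = [
    SkillRecord("place_ball", successes=2, attempts=10, task_weight=1.0),
    SkillRecord("sweep_toys", successes=8, attempts=10, task_weight=1.0),
]
print(pick_skill_to_practice(skills))
```

Under these assumptions the weaker skill is chosen, because it has the most room to improve. A real system would replace each of the three helper functions with something far more careful.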

Yet this capability raises important ethical and safety concerns: what if robots learn to perform tasks in ways that their creators did not anticipate or fully understand? The possibility of unintended consequences looms large, especially as robots become more ingrained in everyday settings like homes and hospitals. The more they learn, the more unpredictable their behavior could become, potentially leading to scenarios where robots make decisions that conflict with human values or safety protocols. Privacy is another crucial concern in human-robot interactions, as emphasized in a 2023 study by the National Library of Medicine. The study’s authors warn that advanced, automated robots can collect and process vast amounts of personal data, such as eating and sleeping habits, which could lead to privacy violations if misused.

At the heart of these concerns is the concept of “robot overlearning.” As robots practice and refine their skills, there’s a risk they could develop abilities that extend beyond their intended scope. This might sound like science fiction, but the reality is that as robots gain more autonomy, the line between programmed behavior and learned behavior becomes increasingly blurred. If robots begin to operate outside of their programmed parameters, it could lead to situations where they act in ways that are difficult to predict or control.

This concern is not just theoretical. The rise of large language models (LLMs) in robotics, which has revolutionized how robots perceive and interact with the world, illustrates the point. LLMs allow robots to understand and process language, enabling them to perform tasks that require a level of commonsense reasoning previously thought impossible for machines. For example, an LLM can help a robot understand that ‘a book belongs on a shelf, not in a bathtub,’ a seemingly simple yet fundamentally important distinction.

However, as robots integrate LLMs more deeply, they start to exhibit behaviors that are increasingly complex and less transparent. The concept of using language as the backbone of robot intelligence opens the door to a new range of capabilities, but it also raises the question of control. If a robot can generate code to perform tasks or engage in complex decision-making processes independently, how do we ensure that it doesn’t cross boundaries that could lead to harm or ethical dilemmas?
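One common way to keep generated behavior within bounds is to check every proposed step against explicit limits before anything is executed. The Python sketch below illustrates that idea only. The plan format, the `ALLOWED_ACTIONS` set, and the `MAX_SPEED_M_PER_S` limit are hypothetical and do not come from any particular robot or library.

```python
# Hypothetical limits; a real deployment would define its own.
ALLOWED_ACTIONS = {"pick", "place", "sweep", "move_to"}
MAX_SPEED_M_PER_S = 0.5


def validate_plan(plan):
    """Return a list of violations; an empty list means the plan passes."""
    violations = []
    for index, step in enumerate(plan):
        action = step.get("action")
        if action not in ALLOWED_ACTIONS:
            violations.append(f"step {index}: action {action!r} is not allowed")
        # Steps that do not specify a speed are treated as stationary.
        speed = step.get("speed", 0.0)
        if speed > MAX_SPEED_M_PER_S:
            violations.append(f"step {index}: speed {speed} exceeds the limit")
    return violations


generated_plan = [
    {"action": "move_to", "speed": 0.3},
    {"action": "open_door"},
]
for problem in validate_plan(generated_plan):
    print(problem)
```

A check like this does not make a robot safe on its own, but it shows the basic design choice: the limits are written by people and sit outside whatever the robot has learned or generated.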

There is little doubt that the integration of robotics and LLMs is advancing the development of robots from mere tools to autonomous agents capable of making decisions. This transformation is epitomized by innovations such as SayCan, where robots use LLMs to plan and execute tasks in a more human-like manner. The introduction of concepts like Code as Policies and Language Model Predictive Control further illustrates how robots are becoming more adept at learning and adapting on their own, which heightens the need to ensure their actions remain aligned with human intentions.

This is why, as robots become more autonomous, the challenge is no longer just about making them work; it’s about ensuring they operate safely and ethically. The potential for robots to overlearn and develop capabilities that are not fully understood by their creators is a double-edged sword. On one hand, it could lead to unprecedented levels of efficiency and innovation. On the other, it could result in robots that operate outside of human control, with potentially dangerous consequences.

Undoubtedly, the innovation brought by EES and LLMs in robotics is impressive. However, given past cases of AI misuse and bias, we must approach autonomous learning with extra caution and build in effective measures to reduce risks and unintended effects.
