Introduction

Data collection in machine learning is like laying the foundation blocks of a building. It supplies the information needed to develop, train, and optimize models. Data shapes the model, and the model’s quality and accuracy depend on the dataset it learns from. For example, biased datasets can produce biased AI algorithms. Collecting high-quality, diverse data is key, but such data is difficult to obtain. Here are the roles data collection plays in ML:

1. Collecting Data to Train Models.

This one is obvious: the more data, and the more relevant it is, the better. For example, if you are training an image classifier, your dataset should comprise images of various types, sizes, and orientations. To train a chatbot, you need data that covers a range of conversations and topics. Furthermore, missing data should be filled with accurate and relevant values. For example, if you are dealing with numerical data and some entries are missing, you can use the average of the other entries in that column. Even complex models, such as OpenAI’s GPT-3, Google’s BERT, and Microsoft’s Turing models, need data to train on. The data collection process itself often relies on AI. Yes, it’s using AI to create AI, as we’ve discussed in a previous article.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Read the data
data = pd.read_csv('data.csv')

# Fill missing values in numeric columns with the column mean
data.fillna(data.mean(numeric_only=True), inplace=True)

# Count the number of rows and columns in the dataset
row_count, col_count = data.shape

# Split the data into features and target (the target is the last column)
X = data.iloc[:, :-1]
y = data.iloc[:, -1]

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
```

The above code snippet reads data from a CSV file, fills missing values with the mean of the column, splits the data into features (X) and target (y), and finally splits the data into training and testing sets. In other words, you have collected the data, pre-processed it, and split it for training a machine learning model.

2. Collecting Data to Optimize Model Performance.

Training the model does not end the task; you also need to optimize its performance, and data comes into play here as well. You can use data to identify the model’s strengths and shortcomings and use those insights to make appropriate changes. Again, the quality of the collected data matters. For example, if you have a customer churn model and the dataset is biased toward one type of customer, the model might over- or under-predict churn, and no amount of tuning will make it accurate. Keep an eye on metrics like accuracy, precision, and recall to identify any gaps or changes. To optimize the model, use data from various sources, such as customer surveys, call recordings, and other customer touchpoints.

```python
import pandas as pd
from sklearn.metrics import accuracy_score

# `model` is assumed to be the estimator trained on the earlier split

# Read the data
data = pd.read_csv('data.csv')

# Check the shape of the data
row_count, col_count = data.shape

# Split the data into features and target
X = data.iloc[:, :-1]
y = data.iloc[:, -1]

# Use the model to make predictions
predictions = model.predict(X)

# Calculate accuracy
accuracy = accuracy_score(y, predictions)

# Check for any gaps or changes
if accuracy < 0.7:
    # Collect more data (assumed to have the same columns) and append it
    additional = pd.read_csv('additional_data.csv')
    data = pd.concat([data, additional], ignore_index=True)

    # Retrain the model on features and target
    model.fit(data.iloc[:, :-1], data.iloc[:, -1])
```

The above code snippet not only reads data from a CSV file and splits it into features and target, but also uses the model to make predictions. It then calculates the accuracy and checks it against a threshold. If accuracy is low, it collects additional data and retrains the model. In this way, data collection helps optimize the model’s performance.
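Accuracy alone can hide the kind of bias described above. As a minimal sketch, assuming a made-up churn dataset in which only 2 of 10 customers churn (the labels and the `precision_recall` helper are illustrative, not taken from a real model), a model that never predicts churn still reaches 80% accuracy while its recall for churners is zero:

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when the class is never predicted or never present
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall

# Hypothetical labels: 1 = churned, 0 = stayed
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_stay = [0] * 10                  # a model that never predicts churn
mixed = [0, 0, 0, 0, 0, 0, 1, 0, 1, 0]  # one false alarm, one hit, one miss

print(precision_recall(y_true, always_stay))  # (0.8, 0.0, 0.0)
print(precision_recall(y_true, mixed))        # (0.8, 0.5, 0.5)
```

Both models have the same accuracy, which is why precision and recall are worth tracking alongside it.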

3. Collecting Data for Model Maintenance.

Once the model is trained and deployed, the data collection process should continue. Collecting data on model performance and predictions can help you identify and address issues such as bias and declining accuracy. Collecting user feedback, customer sentiment, and other related data can help you understand how the model is being used and how it can be improved. The primary goal of any machine learning model should be to improve its accuracy over time, so data collection should be a repeated, ongoing process.

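```python
import pandas as pd
from sklearn.metrics import accuracy_score

# `model` is assumed to be the deployed estimator; every CSV is assumed to
# share the same columns, with the target in the last column

# Collect data from customers
data = pd.read_csv('customer_feedback.csv')

# Check the shape of the data
row_count, col_count = data.shape

# Check for any bias or accuracy issues
X, y = data.iloc[:, :-1], data.iloc[:, -1]
accuracy = accuracy_score(y, model.predict(X))

if accuracy < 0.7:
    # Collect more data
    additional = pd.read_csv('additional_data.csv')
    data = pd.concat([data, additional], ignore_index=True)

    # Retrain the model
    model.fit(data.iloc[:, :-1], data.iloc[:, -1])

# Collect data from other sources
data_2 = pd.read_csv('data_from_other_sources.csv')

# Combine the data by appending the new rows
data = pd.concat([data, data_2], ignore_index=True)

# Retrain the model
model.fit(data.iloc[:, :-1], data.iloc[:, -1])
```

The above code snippet collects data from customers, checks for any bias or accuracy issues, collects data from other sources, combines the data, and retrains the model. In this way, data collection helps maintain and improve the model’s performance over time.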
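One way to decide when retraining is due is to track accuracy over the most recent predictions rather than over the whole history. The sketch below assumes feedback arrives as (prediction, actual) pairs. The `RollingAccuracy` class, the window size, and the reuse of the 0.7 threshold from the snippet above are illustrative choices, not a prescribed method.

```python
from collections import deque

class RollingAccuracy:
    """Track accuracy over the last `window` labelled predictions."""

    def __init__(self, window=100, threshold=0.7):
        self.outcomes = deque(maxlen=window)  # True for a correct prediction
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None  # nothing observed yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        acc = self.accuracy()
        return acc is not None and acc < self.threshold

monitor = RollingAccuracy(window=5, threshold=0.7)
# Hypothetical feedback stream: the model starts well, then degrades
for prediction, actual in [(1, 1), (0, 0), (1, 1), (1, 0), (0, 1), (1, 0)]:
    monitor.record(prediction, actual)

print(monitor.accuracy(), monitor.needs_retraining())  # 0.4 True
```

Because the window only keeps the latest outcomes, a recent drop in quality shows up quickly instead of being averaged away by older, better results.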

4. Data Extension.

Data collection can open room for data extension. Some patterns exist in the data, and some are created. Data extension is the process of creating new examples and patterns from existing data. Take image generators, for example: they use AI to collect and label images, and even the recreated images are labeled and used to train the model to create new, completely unique ones.

```python
import cv2
import numpy as np
import pandas as pd

# Read the data; the last column holds the label and, as an assumption,
# the first column holds the path to each image file
data = pd.read_csv('data.csv')
paths = data.iloc[:, 0]
y = data.iloc[:, -1].to_numpy()

# Load the original images (assumed to share one size so they stack into an array)
images = [cv2.imread(path) for path in paths]

# Generate one modified (blurred) copy of every image
generated_images = [cv2.blur(image, (5, 5)) for image in images]

# Label the generated images with the labels of their originals
labels = y.copy()

# Add the generated images to the dataset
X = np.concatenate((np.array(images), np.array(generated_images)))
y = np.concatenate((y, labels))

# Retrain the model (assumed to exist and to accept flattened pixel arrays)
model.fit(X.reshape(len(X), -1), y)
```

The above code snippet reads the data, loads the images, generates modified copies, labels them, adds them to the dataset, and retrains the model. In this way, data extension helps create more data, which can be used to train the model.

As you can see, we used Python code snippets to illustrate the process of data collection for machine learning. In the first section, we collected data to train the model. In the second, we used data to optimize the model’s performance. The third was about using data to maintain and improve the model. And in the fourth, we used existing data to extend the dataset. Understanding and correctly using data collection is the key to creating powerful and accurate models that can solve real-world problems.

Data Collection Tools for Data Engineers

Keeping up with the latest data trends is one of the most important factors in successful data collection. 70% of the world’s data is user-generated; as such, filtering collected data before it reaches a machine learning model is essential, because user-generated data can be unstructured and noisy and can contain incorrect or obsolete information. It is also the hardest kind of data to keep current, because it needs to be constantly updated and monitored. For such live data, collect only from reliable sources. First and foremost, though, it’s worth noting that experienced data engineers use specific techniques and tools to collect, clean, sort, and store data.

Here are the best tools for collecting only filtered data for a machine learning model:

Wrangling Tools

Wrangling tools like Trifacta, Talend, and Pentaho help clean and organize data from different sources like spreadsheets, databases, and web applications. They have powerful visual data preparation capabilities that allow data engineers to quickly identify and discard unwanted data. Not to mention, they are also effective in transforming data into a more usable format.

Data Lake

A data lake is a centralized repository of raw, structured, semi-structured, and unstructured data. After data collection, this helps data engineers store and access data from the same place. This makes it easier to search, combine, and filter the data according to their needs.

Data Science Platforms

Platforms like RapidMiner and KNIME offer an intuitive environment for creating data models and visualizations. These platforms can help data engineers identify patterns, trends, and outliers in data. The tools they provide are powerful for filtering data and generating insights from it.

Business Intelligence Tools

Business intelligence tools such as Tableau and QlikView help organizations quickly access and analyze data from multiple sources. These tools are great for data engineers because they provide an interactive interface for creating sophisticated data visualizations. They also allow data engineers to filter, sort, and aggregate data for efficient data collection.

Cleansing Tools

Data cleansing tools like Tamr and OpenRefine can detect, remove, and replace corrupted or incorrect data. They use their own algorithms to detect patterns and outliers, and replace invalid entries with valid data.
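The detect-and-replace step can be illustrated without any of these tools. The following is only a sketch of one common rule (the 1.5 × IQR fence) using Python’s standard library. It is not how Tamr or OpenRefine work internally, and the `replace_outliers` helper and the sample values are made up for illustration.

```python
import statistics

def replace_outliers(values, k=1.5):
    """Replace values outside the k * IQR fences with the median of the inliers."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    inliers = [v for v in values if low <= v <= high]
    fill = statistics.median(inliers)
    return [v if low <= v <= high else fill for v in values]

ages = [31, 29, 35, 33, 30, 32, 340]  # 340 is a likely data-entry error
cleaned = replace_outliers(ages)
print(cleaned)
```

Replacing with the median of the remaining values is one choice among several; dropping the row or flagging it for manual review may be more appropriate depending on the dataset.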

Data Mining Tools

Data mining tools like RapidMiner and Weka can extract meaningful information from large datasets. They help data engineers filter, sort, and update the data collected from multiple sources. Once customer data has been collected, for example, data mining tools can identify purchasing patterns. Apart from filtering the data, they also provide insights like customer churn rate and product popularity.

ETL Tools

Extract, Transform, and Load (ETL) tools like Alooma and Fivetran help move data from different sources into a single location. They allow data engineers to filter, clean, and transform data quickly.
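Stripped of connectors and scheduling, the extract-transform-load pattern itself is short. The sketch below uses only Python’s standard library and an in-memory SQLite database. The two CSV sources, the column names, and the `customers` table are invented for illustration and do not reflect how Alooma or Fivetran are configured.

```python
import csv
import io
import sqlite3

# Extract: two hypothetical sources with inconsistent formatting
crm_csv = "email,country\nAnn@Example.com ,us\nbob@example.com,DE\n"
shop_csv = "email,country\nbob@example.com,de\n,fr\ncara@example.com,FR\n"

def extract(text):
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Normalise case and whitespace, and drop rows without an email."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if email:
            cleaned.append((email, row["country"].strip().upper()))
    return cleaned

# Load: the primary key plus INSERT OR IGNORE removes duplicates across sources
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (email TEXT PRIMARY KEY, country TEXT)")
for source in (crm_csv, shop_csv):
    conn.executemany("INSERT OR IGNORE INTO customers VALUES (?, ?)",
                     transform(extract(source)))

rows = conn.execute("SELECT email, country FROM customers ORDER BY email").fetchall()
print(rows)
```

Five input rows from two sources end up as three clean, unique customer records in a single location.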

Another important thing to consider is the security of data. It’s necessary to ensure that no minors are harmed, no personal data is leaked, and no malicious actors are involved in the data collection process. For that, data engineers need to use secure data transfer protocols and encryption technologies like TLS (the successor to SSL), and use tools like Dataguise to detect any suspicious activities.
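On the client side, enforcing encrypted transfer in Python can be as small as the following sketch. It relies on the standard-library `ssl` module. Requiring TLS 1.2 as the minimum version is an assumption about policy, not something the tools above mandate.

```python
import ssl

# Default context: verifies the server certificate and hostname
context = ssl.create_default_context()

# Refuse legacy protocol versions (SSL 3.0, TLS 1.0, TLS 1.1)
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

The resulting `context` can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=context)`.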

What does Collecting High-Quality Data Mean?

For a machine learning model, high-quality data means data that is not only accurate but also complete, consistent, and up to date. In ML, a single data point can make the difference between accuracy and failure. Take linear regression, for example: a single outlier can noticeably shift the fitted line and hurt the model’s accuracy. In AI-powered devices like self-driving cars, a missing data point can have catastrophic results. 85% of machine learning projects fail amid insufficiency, inaccuracy, and inconsistency in data. That often happens even after using the tools mentioned above, due to a lack of understanding in choosing fundamental data sources for the purpose. Here are the sources of data collection for machine learning:

1. Scraping Blogs for Informative Data

- Natural Language Processing

- Topic Modeling

- Text Classification

Blogs are great data sources for current trends, new products and services, customer feedback, and more. To train a machine learning model, the data must be structured and labeled, which can be done after web scraping. Because scraping publicly available pages is legal in many cases, it’s a popular choice for ML engineers, though you should still check each site’s terms of service and local law. Furthermore, filtering blogs for relevant content, and by the blog’s authority and accuracy, makes them a great data source. Be aware that written data contains the most misinformation. In fact, stats show that more than 85% of collected text data is wrong, outdated, or incomplete.

2. Social Media Scraping

- Sentiment Analysis

- Brand Monitoring

There are two different sets of social media: one like LinkedIn, Twitter, and Reddit, and the other, which is more visual, like Instagram, Snapchat, and Facebook. The first set is great for collecting text-based data, while the second is better for collecting and analyzing visual data.

Text-based

For example, if you were scraping data from a Twitter account, you could use a sentiment analysis library like TextBlob to label the text data.

```python
# Import the library
from textblob import TextBlob

# Scrape data from Twitter
# (get_tweets_from_account is a placeholder for your own scraping helper,
# not a function provided by TextBlob)
tweets = get_tweets_from_account(username='example_user')

# Label the data
labels = []
for tweet in tweets:
    sentiment = TextBlob(tweet).sentiment
    if sentiment.polarity > 0:
        labels.append('positive')
    elif sentiment.polarity < 0:
        labels.append('negative')
    else:
        labels.append('neutral')

# Output the labeled data
labeled_tweets = zip(tweets, labels)
print(list(labeled_tweets))
```

Twitter is a great source of relevant comments and opinions, providing an easy way to collect and analyze public data. LinkedIn is even better for industry-specific data, as most of its posts come from professionals.

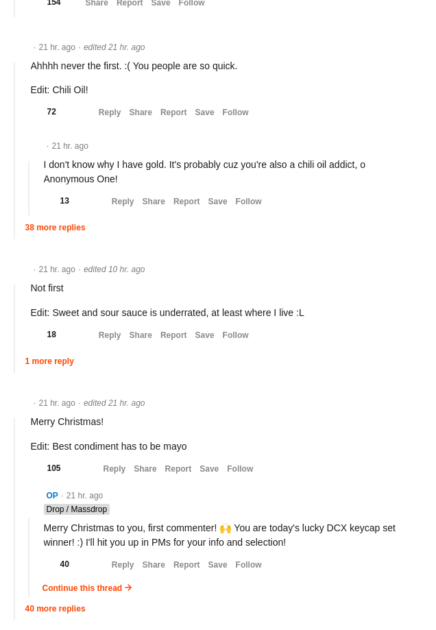

Reddit, for example, may not be your top social media source of data collection:

Did you, at any point, realize that the above Reddit thread was about a Mechanical Keyboard? Because it actually was! This is what the actual title looked like:

The point that most text-based web data is wrong also applies here.

Visual-based

Social media like Instagram and Snapchat are great sources of visual data like images and videos. One thing to remember, though, is that the data must be labeled for the ML model to be trained properly. For example, if you’re collecting images of cars, you need to label them as “car”, “sedan”, or “SUV”. You should also check the authority of the original poster, and the accuracy of their social profile, to make sure the collected visual data is high quality.

Below is an example of how to label visual image data after scraping it from social media, in this case Instagram. First, we scrape the image data using the Instagram API:

```python
import requests
import json

# Set the access token
access_token = 'xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx'

# Specify the URL ({media-id} is a placeholder for the ID of the media item;
# this legacy endpoint may no longer be available, so check the current API docs)
url = 'https://api.instagram.com/v1/media/{media-id}/?access_token=' + access_token

# Make the request
resp = requests.get(url)
data = resp.json()

# Print the response
print(json.dumps(data, indent=2))
```

Once the data is scraped and saved with its labels, we can encode the text labels as integers, which is the form supervised machine learning algorithms expect:

```python
# Import libraries
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Load the data (assumed to contain a 'label' column)
data = pd.read_csv('instagram_data.csv')

# Instantiate the LabelEncoder
le = LabelEncoder()

# Fit the LabelEncoder with the data
le.fit(data['label'])

# Encode the labels
data['label_encoded'] = le.transform(data['label'])

# Print the encoded labels
print(data['label_encoded'])
```

3. Collecting Data from Surveys

- Demographic Analysis

- Regression Analysis

I can’t emphasize this one enough. Surveys can provide the highest quality data, but it all depends on your data collection strategy. Stats tell us that the average response rate for surveys is around 33%. So, if you’re looking to collect data from 100 people, you need to send out slightly more than 300 surveys. The accuracy and consistency clearly depend on the respondents’ characteristics, such as reliability and honesty.
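The arithmetic behind that estimate can be checked directly. As a small sketch (the `surveys_needed` helper is illustrative), dividing the target number of responses by the response rate and rounding up gives the number of surveys to send. With a 33% rate, 100 responses take 304 surveys, slightly more than the round figure of 300.

```python
import math

def surveys_needed(target_responses, response_rate):
    """Smallest number of surveys whose expected responses reach the target."""
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in (0, 1]")
    return math.ceil(target_responses / response_rate)

print(surveys_needed(100, 0.33))  # 304
```

This is an expected value only; actual response counts vary, so a safety margin on top of the computed number is reasonable.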

If you are collecting data via survey to train an ML model that requires accuracy, you need to make sure the surveyed individuals are reliable. Examples of ML models that require accuracy are:

- models that predict the likelihood of a person buying a product

- stock market bots

- medical diagnostics

- fraud detection systems

- self-driving cars

80% of people say they are truthful in their surveys, which suggests that dishonesty is usually not the main threat to data accuracy. However, the actual accuracy of the data can vary depending on the complexity of the questions themselves.

For example, I am conducting a simple little survey about this post, and I’ll likely get accurate results:

Are you enjoying the post? (Yes / No)

And if the survey data will be used to train an ML model that requires consistency, like natural language processing, you need to make sure that individuals answer the questions in a consistent manner, i.e. with less misinformation, even at the cost of fewer participants.

4. Observations

- Pattern Recognition

- Predictive Modeling

The observation method of data collection helps researchers get a better understanding of a situation. It involves observing people in their natural environment and recording the data. Market research, where researchers observe people’s behavior in stores, for instance, uses this method. You don’t need to rely on verbal or written responses from the participants. Just observe, collect, and analyze the data. To create game NPCs or virtual assistants, most of the data is collected by observing people’s behavior. The ways to observe people’s behavior are:

a. Direct observations – Observing people in their natural environment, such as when they’re shopping at a store or relaxing in a park.

b. Participant observations – Involves researchers interacting with the participants and observing their behavior.

c. Self-observations – The researcher observes his or her own behavior.

5. Experimentation for Data Collection

- Hypothesis Testing

- Predictive Modeling

Machine learning is mostly about experimentation anyway. You may set up an experiment to test a hypothesis and measure the results. In fact, the most accurate data is collected through controlled experiments. Yes, controlled experiments are expensive and time-consuming, but you can’t ignore the results. Some examples of experimental techniques for data collection in ML are:

a. A/B testing – To measure the impact of changes in the design or features of a website.

b. Split testing – Divides the participants randomly into two or more groups, with different treatments for each.

c. Multi-armed bandit – Gives participants multiple choices, while the experimenter is trying to find out which option is the most successful.
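To make the multi-armed bandit idea concrete, here is a minimal simulation of one common strategy, epsilon-greedy: most of the time it shows the option with the best observed success rate, and occasionally it tries a random option to keep learning. The conversion rates, the `epsilon_greedy` function, and its parameters are assumptions chosen for illustration; a real experiment would record live user responses instead of simulated ones.

```python
import random

def epsilon_greedy(true_rates, rounds=5000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit; return pulls and estimated rate per arm."""
    rng = random.Random(seed)
    pulls = [0] * len(true_rates)
    successes = [0] * len(true_rates)

    def estimate(arm):
        return successes[arm] / pulls[arm] if pulls[arm] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(len(true_rates))             # explore
        else:
            arm = max(range(len(true_rates)), key=estimate)  # exploit
        pulls[arm] += 1
        # Simulated user response; real data would come from live traffic
        if rng.random() < true_rates[arm]:
            successes[arm] += 1

    return pulls, [estimate(a) for a in range(len(true_rates))]

# Hypothetical conversion rates for three page variants
pulls, estimates = epsilon_greedy([0.05, 0.08, 0.12])
print(pulls, [round(e, 3) for e in estimates])
```

Unlike a fixed A/B split, this approach shifts traffic toward better-performing options while the experiment is still running, at the price of less evenly distributed data across the options.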

One more thing about experimentation: collecting data after the experiment is complete is just as important as collecting it during the experiment. You can use this valuable data to measure the results of the experiment. For example, if you are testing a new feature on a website, you can measure the success of the feature by looking at the user engagement data. However, it is important to note that experimentation is only one of the methodologies of data collection.

Bottom Line

Data is integral to the success of machine learning, and its collector, whether a human or an AI-enabled system, plays an important role. Apart from time, effort, and skill, data collection requires the ability to identify data sources. One or two sources will not be sufficient, as you know well by now. Trained professionals who can find the best data sources are invaluable.